Understood on the code side.

Just to clarify, English is my second language and I’m still acquiring vocabulary. The older the text is, the more words I don’t know. I’m struggling with Shakespeare. I’m lost with Chaucer.

The examples I gave are from Tom Sawyer by Mark Twain. The last time I read Mark Twain was in 10th grade in high school. Readlang rates this text as A2. I don’t know how these levels are calculated, but that’s what I see in my library view.

Is an A2 text that is read in 10th grade an advanced text? Is it too much to expect Readlang to give correct translations for an A2 text? Would most language learners be fine with getting incorrect meanings for an A2 text?

There are multiple layers to this, and I’ve talked about many different ideas, such as reading texts with AI and using more advanced models. In this particular case, I’m just pointing out that the current translations of an A2 text that is read in 10th grade are incorrect. If an English teacher tried the text she assigned in class on Readlang, would she recommend it to her students?

Part of the reason I’m reading in English with Readlang is to expand my vocabulary, but also to test the tool and see how it works. It’s easier for me to judge whether the translations work well in English than in Spanish or German, so I’m pointing things out in English because it’s the language I know best.

If I use it in German, can I read Goethe? Can I trust it? Or can I read Mozart’s Zauberflöte libretto? That text is over 200 years old, and I was assigned to read it in my second year of German. Would my German professor be comfortable with the translation I see on Readlang? Again, this was after one year of studying German, so I was still at a beginner stage.

The bar I’m talking about in this particular case is very low: translating beginner texts. Is getting a large percentage of those words wrong acceptable to most language learners?

Again, I’m a big fan of and believer in this project. The interface is great, the concept is great, and the LLMs work if used correctly, but the error rate on an A2 text is just too high. I’m talking about the basics here. The other things I raised in the other threads will happen, whether we do them here or elsewhere, but in this thread I’m only talking about the basics: getting the correct meaning in context.

Here’s why this is dangerous. If I look up a word in the dictionary and see 5 definitions, I’m aware that there are 5 definitions, and I’ll judge which one fits best. But if I trust the model to pick the correct definition for me in this context, I will simply assimilate whatever definition it gives me. A certain percentage of those words will be wrong, so my knowledge of the language will be faulty, and I’ll have to spend significantly more effort unlearning the wrong definitions and learning the correct ones.

Again, if we can’t trust the translations of an A2 text, it undermines the entire project.

Honestly, I don’t think this is doable without enlarging the context. When we read, we have the larger context. When we look up a word in the dictionary and see 5 or 10 meanings, we have that larger context in our heads. Why would we expect the models to pick the right definition without it? I’d go further: in many cases, I don’t think people could pick the correct definition from a single short sentence either. Why would we ask the model to do something a person couldn’t?

What I’m doing here is challenging the assumption that a one-sentence context is enough. I would argue that it’s not: the LLMs can’t do it, and people can’t do it. It works in most cases, but is “most cases” good enough? If I’m teaching you a language and 5% of the words I teach you are wrong, is that an acceptable threshold? (I don’t know whether it’s 5% or 2%, but I’d like it to be close to 0.)

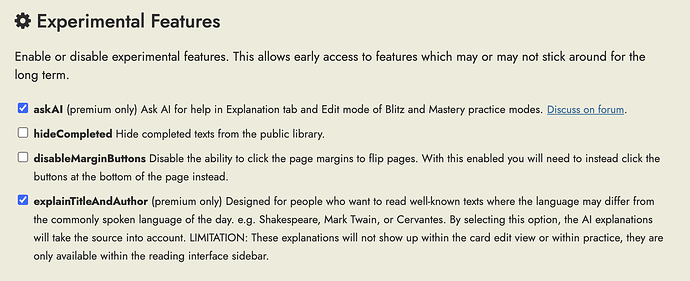

Since we’re trying to figure these things out, could we expand the context for the experimental feature, if that’s easier? We can experiment with it for a while, figure out how large the context should be, and see how it works. If we can get that section to work properly, I can at least rely on it until we figure out a better way for the rest of the features.
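To make the idea a bit more concrete, here’s a rough sketch of what a configurable context window could look like: instead of sending only the sentence containing the word, take that sentence plus `radius` sentences on each side. This is only an illustration under my own assumptions, not how Readlang actually works. The function names (`split_sentences`, `find_sentence`, `context_window`, `build_prompt`) and the prompt wording are hypothetical, and the sentence splitter is deliberately naive (it will mis-split things like “Mr.” or dialogue).

```python
import re


def split_sentences(text: str) -> list[str]:
    # Naive splitter (assumption): break after ., ! or ? followed by whitespace.
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def find_sentence(sentences: list[str], word: str) -> int:
    # Index of the first sentence containing `word` as a whole word (case-insensitive).
    pattern = re.compile(r"\b" + re.escape(word) + r"\b", re.IGNORECASE)
    for i, sentence in enumerate(sentences):
        if pattern.search(sentence):
            return i
    raise ValueError(f"{word!r} not found in text")


def context_window(text: str, word: str, radius: int) -> str:
    # The sentence containing `word`, plus up to `radius` sentences before and after it.
    sentences = split_sentences(text)
    i = find_sentence(sentences, word)
    start = max(0, i - radius)
    end = min(len(sentences), i + radius + 1)
    return " ".join(sentences[start:end])


def build_prompt(word: str, context: str) -> str:
    # Hypothetical prompt wording; the real feature may phrase this differently.
    return f'What does "{word}" mean in the passage below?\n\n{context}'


if __name__ == "__main__":
    # Made-up sample text for illustration only.
    sample = ("Tom had a hard time. The fence was long. "
              "He had to whitewash all of it. His friends came by to watch.")
    for radius in (0, 1, 3):
        print(radius, "->", context_window(sample, "whitewash", radius))
    print(build_prompt("whitewash", context_window(sample, "whitewash", 1)))
```

With `radius = 0` this reproduces the one-sentence context; raising it is the “play with it for a while” knob, so we could compare translations at different window sizes on the same A2 text.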

Thank you!